Recently, the issue of K-pop deepfakes has escalated significantly, with numerous incidents highlighting the exploitation of K-pop idols through the creation and distribution of explicit deepfake content.

Hundreds of female K-pop idols have been targeted, with reports indicating that over 53% of individuals featured in deepfake pornography are Korean singers and actresses. Major groups like Twice, NewJeans, and Blackpink have been specifically mentioned as victims.

Overview of K-pop Deepfake Incidents

June 2024: Agencies like ADOR (NewJeans) begin warning about the legal repercussions of deepfake videos featuring their artists. The issue gains traction as more victims come forward.

August 2024: Reports reveal that over 53% of individuals in deepfake pornography are Korean singers and actresses. Awareness grows, with fans rallying against the exploitation of idols on social media platforms.

August 29, 2024: The term “New Nth Room” is referenced in discussions about deepfake pornography, linking it to a previous scandal involving the distribution of sexual content. Fans express outrage and call for collective action to protect idols.

September 1, 2024: JYP Entertainment (Twice) publicly announces its commitment to legal action against creators and distributors of deepfake videos involving their artists, labeling such content as “irrefutably illegal.”

September 2, 2024: YG Entertainment also declares its intention to take legal measures against deepfake pornography, emphasizing the need for strict enforcement against this growing issue.

September 6, 2024: South Korean authorities initiate a crackdown on sexually exploitative deepfakes, launching investigations into platforms like Telegram for facilitating the distribution of these materials.

September 11, 2024: Major K-pop agencies collectively declare war on deepfake content, as reports indicate a significant increase in cases targeting K-pop stars. The police report a sharp rise in digital sex crimes involving fake imagery.

By September 2024, police data indicates that there were 297 reported cases of sexually explicit deepfake crimes in just the first seven months of the year, a significant increase compared to previous years.
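To put the police figure in perspective, the arithmetic below converts it to a monthly average and a rough full-year projection. The projection is only an illustration: it assumes the reporting rate stays constant for the rest of the year, which the source does not claim.

```python
# Figures from the police data cited above.
reported_cases = 297
months_covered = 7

# Average number of reported cases per month over the period.
monthly_average = reported_cases / months_covered

# Naive full-year projection (assumption: the monthly rate stays constant).
full_year_projection = monthly_average * 12

print(round(monthly_average, 1))    # 42.4
print(round(full_year_projection))  # 509
```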

What Is a Deepfake?

Deepfake technology refers to the use of artificial intelligence to create highly realistic fake images, videos, or audio recordings.

The term is a combination of “deep learning,” a subset of AI, and “fake.”

It is a synthetic media product where a person’s likeness or voice is manipulated to make it appear as though they are saying or doing something they did not actually say or do. This manipulation can involve swapping faces in videos or altering audio to match the video content.

How Do Deepfakes Work?

Deepfake technology operates through advanced artificial intelligence techniques, primarily utilizing Generative Adversarial Networks (GANs) and autoencoders.

Here’s a detailed explanation of how deepfakes are created:

Data Collection

The first step in creating a deepfake involves gathering a substantial dataset of images, videos, or audio recordings of the target individual.

This dataset should be diverse, capturing various angles, expressions, and movements to ensure the final product appears realistic.

Model Training

Once the data is collected, deep learning algorithms are employed to train the AI model. This process involves two main types of neural networks:

Autoencoders: These neural networks compress a face into a compact internal representation and then reconstruct it, learning the person’s key facial features and expressions in the process.

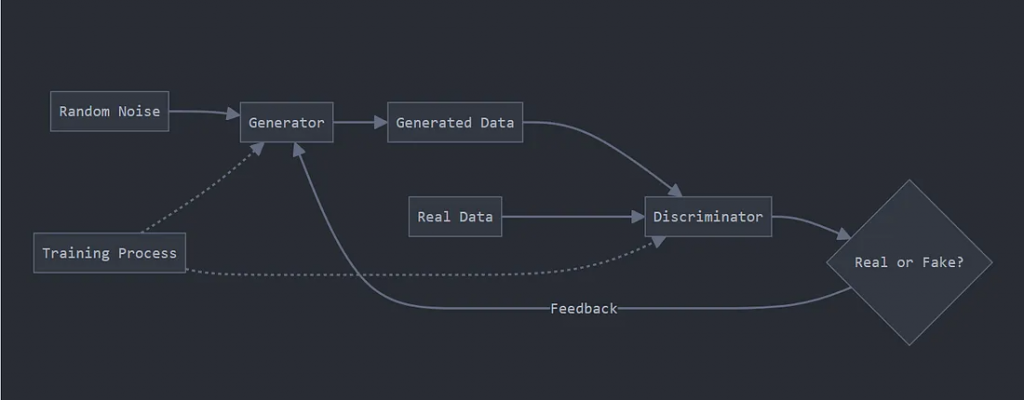

Generative Adversarial Networks (GANs): A GAN consists of two competing neural networks—a generator and a discriminator. The generator creates fake content (e.g., images or videos), while the discriminator evaluates the authenticity of this content. This adversarial relationship improves both networks over time, with the generator producing increasingly realistic outputs.
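For readers who want the adversarial relationship stated precisely, it is commonly written as a minimax game. This is the standard textbook formulation rather than anything specific to the incidents above, and the symbols are introduced here for illustration: G is the generator, D is the discriminator, x is a real sample, and z is the random noise fed to the generator.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make this value large by scoring real samples near 1 and generated samples near 0, while the generator tries to make it small by producing samples the discriminator scores as real.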

Content Generation

After training, the model can generate new content by superimposing the target’s features onto another person’s body or creating entirely new audio using the target’s voice. The generator uses patterns learned during training to create convincing fakes.

Face Swapping: In video deepfakes, the model swaps the face of one person with that of another while maintaining realistic movements and expressions.

Lip Syncing: For lip-sync deepfakes, the model analyzes speech patterns and adjusts the lip movements in a video to match a chosen audio track, making it appear as though the person is speaking words they never actually said.

Refinement

The initial output from a deepfake model is often imperfect. Therefore, an iterative refinement process follows, where adjustments are made based on feedback from the discriminator or manual corrections to enhance realism.

How Are K-pop Deepfakes Created?

In today’s rapidly advancing AI technology landscape, creating a deepfake is not difficult.

There are still many websites and open-source models that offer free image and video face-swapping services.

However, not all websites and open-source models provide deepfake porn services.

*Disclaimer: The websites used in the following demo are only for showcasing face-swapping functionality and do not support deepfake porn services.

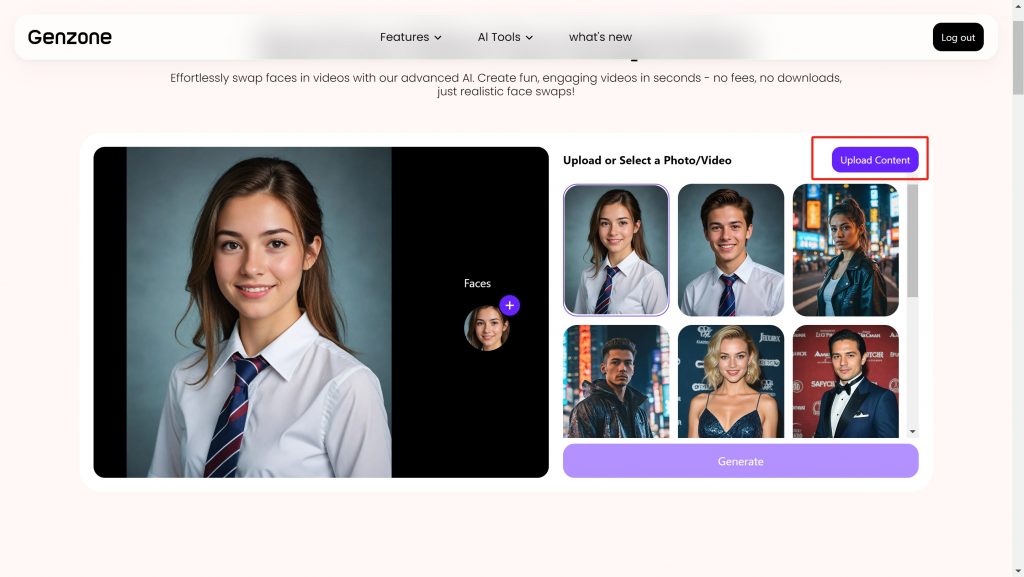

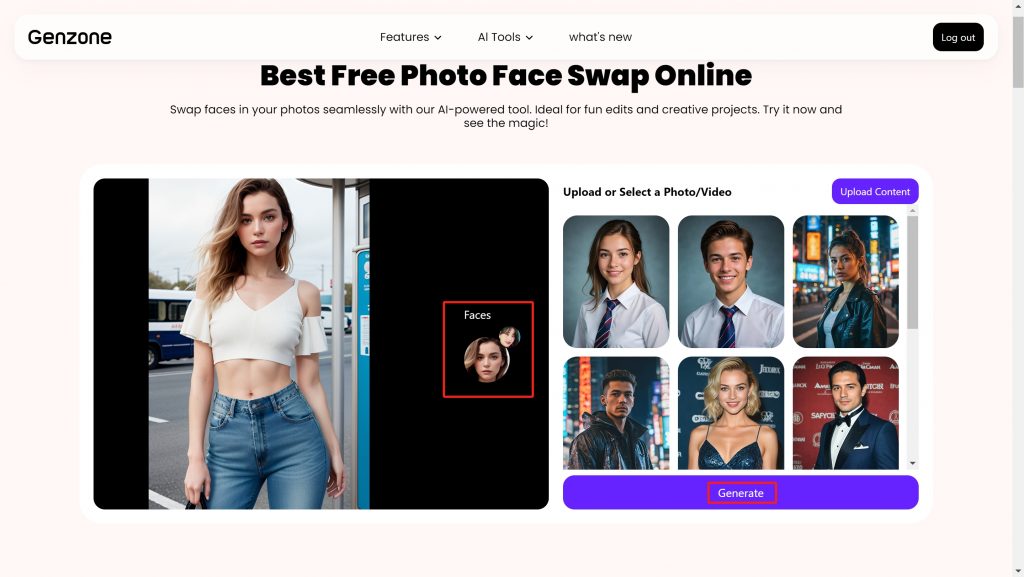

(1) Visit genzone.ai/video-face-swap and sign up or sign in; the site provides two free trials.

(2) Click ‘upload content’ to upload the target video

(3) Click ‘Faces’ on the right and ‘Add Face’ to upload the portrait of Brooke Monk’s face.

(4) Click ‘Generate’ and wait a few seconds for the result. You can then download the video directly.

The Harms and Damages to K-pop Deepfake Victims

Psychological and Emotional Distress

Victims often suffer severe psychological trauma from the non-consensual sexualization in deepfake videos.

Knowing their likeness has been used for explicit content can lead to feelings of violation, anxiety, and depression.

Many idols feel unsafe and vulnerable in an industry already full of intense scrutiny.

Damage to Reputation

These fake videos can distort their professional personas, leading to public backlash, loss of fan support, and potential career damage.

The stigma from being featured in such content can overshadow their artistic achievements.

Legal Consequences

Creating and distributing deepfake pornography is illegal in South Korea, but enforcement is challenging.

Victims may face lengthy and emotionally taxing legal battles as agencies work to protect their rights.

Social Stigmatization

Victims often face social ostracism or harassment, worsening their feelings of isolation. The intense scrutiny of idols’ personal lives in K-pop fandom can make them more vulnerable to negative public perceptions when involved in such scandals.

Increased Vulnerability for Minors

Many victims are minors or young adults, raising additional concerns about their safety and well-being. A significant percentage of deepfake victims are teenagers, who may lack the resources or support systems to cope with such violations.

Broader Societal Implications

The prevalence of deepfakes contributes to a culture of misogyny and exploitation in digital spaces, affecting not only K-pop idols but also women in general. This trend highlights the need for stronger legal protections and societal awareness about consent and digital rights.

Why Are Female Idols the Main Targets of K-pop Deepfakes?

A Double Standard

Female K-pop stars live under strict rules. Many aren’t allowed to date openly or talk about relationships. At the same time, research shows they face much more sexual objectification than male K-pop stars.

The ‘Anti-fans’ Problem

This unfair treatment may explain why female K-pop stars are targeted more often by fake AI-generated images (deepfakes). Dr. Hye Jin Lee from USC’s Annenberg School suggests these attacks might come from “anti-fans” – people who actively dislike certain stars.

Image Matters

In K-pop, image is everything – especially for women. Female stars must maintain a perfect, clean image to protect their careers. This makes them vulnerable to people who want to harm their reputation through fake images.

How to Spot K-pop Deepfakes?

Detecting deepfakes can be tricky, but there are several methods and signs to help identify manipulated content. Here’s a simple guide:

Visual Cues

Facial Inconsistencies: Look for unnatural facial expressions or movements. Deepfakes often struggle with subtle human expressions, leading to awkward or stiff appearances.

Eye Behavior: Pay attention to blinking. Deepfakes may have irregular blinking patterns or no blinking at all, as AI struggles to simulate this natural behavior.

Lip Sync Errors: Watch for mismatches between spoken words and lip movements. Delays or advances in lip movements, especially with sounds like “p,” “b,” and “m,” are common in deepfakes.

Lighting and Shadows: Check for inconsistent lighting and shadows. Authentic videos have coherent lighting that matches the environment, while deepfakes may show conflicting shadows or unnatural light sources.

Background Inconsistencies: Scrutinize the background for disjointed motion or artifacts that don’t align with the foreground. Look for visual noise, unusual patterns, or pixelation that suggests digital tampering.
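The eye-behavior cue above can be roughed out numerically. The sketch below uses the eye aspect ratio (EAR), a common blink heuristic, and assumes you already have six (x, y) eye landmarks per frame from some face-landmark tool; the landmark ordering, the 0.2 threshold, and the function names are illustrative assumptions, not part of any specific detector mentioned in this article.

```python
from math import dist


def eye_aspect_ratio(eye):
    """EAR for one eye given six (x, y) landmarks ordered p1..p6.

    Assumed ordering: p1 and p4 are the horizontal eye corners,
    p2 and p3 lie on the upper lid, p6 and p5 on the lower lid.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = dist(p2, p6) + dist(p3, p5)
    horizontal = dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_per_frame, threshold=0.2, min_frames=2):
    """Count runs of at least min_frames consecutive frames with EAR below threshold."""
    blinks = 0
    closed_run = 0
    for ear in ear_per_frame:
        if ear < threshold:
            closed_run += 1
        else:
            if closed_run >= min_frames:
                blinks += 1
            closed_run = 0
    # Count a blink that is still in progress when the clip ends.
    if closed_run >= min_frames:
        blinks += 1
    return blinks


def blinks_per_minute(ear_per_frame, fps, threshold=0.2, min_frames=2):
    """Blink rate for a clip sampled at fps frames per second."""
    minutes = len(ear_per_frame) / fps / 60.0
    if minutes == 0:
        return 0.0
    return count_blinks(ear_per_frame, threshold, min_frames) / minutes
```

A blink rate far below what ordinary footage of the same person shows is a reason to look closer, not proof of manipulation; compression, low frame rates, and camera angle can all distort the measurement.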

Audio Cues

Inconsistent Audio Quality: Check for discrepancies in audio quality or synchronization with video. If the audio sounds off compared to the visual context, it could be a sign of manipulation.
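Audio-video synchronization can also be checked with a simple measurement instead of by eye. The sketch below is a minimal illustration, assuming two hypothetical per-frame series that you would have to extract with other tools: `audio_energy` (how loud the speech is in each video frame) and `mouth_opening` (how wide the mouth is open). It searches for the frame shift at which the two series line up best.

```python
def best_lag(audio_energy, mouth_opening, max_lag=10):
    """Return the frame shift that best aligns mouth movement with audio.

    A positive result means the mouth movement trails the audio by that
    many frames; a negative result means it leads. Both inputs are
    equal-rate per-frame series (hypothetical, extracted elsewhere).
    """
    def centered(values):
        mean = sum(values) / len(values)
        return [v - mean for v in values]

    audio = centered(audio_energy)
    mouth = centered(mouth_opening)

    best_shift = 0
    best_score = float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Unnormalized cross-correlation at this shift.
        score = 0.0
        for i, a in enumerate(audio):
            j = i + lag
            if 0 <= j < len(mouth):
                score += a * mouth[j]
        if score > best_score:
            best_shift, best_score = lag, score
    return best_shift
```

For example, if the mouth series is the audio series delayed by two frames, `best_lag` returns 2. A consistent nonzero offset can come from ordinary encoding problems as well as from manipulation, so treat it as a prompt for further checks.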

Technical Tools

Detection Software: Use AI-powered tools designed to detect deepfakes. Some notable options include:

• Intel’s FakeCatcher: Analyzes videos for anomalies indicative of manipulation.

• Deepware Scanner: Allows users to upload videos for immediate analysis.

• Sensity: Monitors online platforms for deepfake content in real-time.

General Best Practices

Source Verification: Check the source of the multimedia file. If it comes from an unreliable platform or lacks proper attribution, it may be more likely to be manipulated.

Zooming In: Magnify the video to reveal oddities not visible at normal size, such as unnatural coloring or irregular facial features.

Cross-Referencing Information: If the content seems suspicious, verify it against reputable news sources or fact-checking websites.

By combining these visual cues, audio checks, and technical tools, you can better spot deepfakes and protect yourself from misinformation.

Why Aren’t Current Laws Enough to Deal with K-pop Deepfakes?

Between 2020 and 2023, Korean courts made 71 decisions about deepfake crimes.

Surprisingly, only 4 of those cases resulted in a prison sentence for deepfake offenses alone. This shows how hard it is to enforce strong punishments for these crimes.
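Expressed as a share, the court figures above work out as follows. This is plain arithmetic on the two numbers cited; the variable names are only for illustration.

```python
# Figures cited above for Korean court decisions, 2020 to 2023.
total_decisions = 71
jail_for_deepfake_only = 4

# Share of decisions that ended in jail time for deepfake offenses alone.
jail_share = jail_for_deepfake_only / total_decisions

print(f"{jail_share:.1%}")  # 5.6%
```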

Big Gaps in the Law

There’s a serious problem with current laws. While making and sharing deepfakes is illegal, simply watching or downloading them isn’t. Legal expert Kim Ye-eun points out that this is very different from other digital crime laws, which usually punish:

- Creating illegal content

- Sharing illegal content

- Keeping illegal content

- Watching illegal content

What Needs to Change?

Experts suggest two main solutions:

- Better Technology

  - We need better ways to spot deepfake videos

  - Systems should be able to identify fake content quickly

- Social Change

  - People need to learn about deepfake risks

  - Society must understand why watching deepfakes is wrong

  - Everyone should know the legal consequences

Looking Forward

As technology continues to advance, it is expected that it will become increasingly difficult to distinguish deepfake content with the naked eye. In addition to continuously updating detection technologies and strengthening laws and penalties for illegal content, countries should collaborate to combat related crimes.

The public should also shift their old perceptions and stop believing that everything they see online is real. By questioning the authenticity of images, audio, and videos on the internet, we can potentially minimize the harm caused by deepfakes.
