In January 2023, the online community was confronted with a disturbing incident that highlighted the growing concerns around artificial intelligence and digital privacy.

This controversy centered around Pokimane, one of Twitch’s most prominent content creators, and raised important questions about consent, digital rights, and the misuse of emerging technologies.

Overview of the Pokimane Deepfake Controversy

The Incident

The situation came to public attention through an unexpected source: a fellow content creator’s livestream.

During a broadcast on January 30, 2023, Brandon “Atrioc” Ewing unintentionally exposed to his viewers that he had accessed a website containing AI-generated manipulated content targeting various female streamers, including Pokimane, Maya Higa, and QTCinderella.

The Immediate Aftermath

Following the exposure, Atrioc quickly ended his stream and later returned with an emotional apology.

In his statement, he attributed his actions to what he described as morbid curiosity about AI technology, explaining that he had followed an online advertisement to the site in question.

His tearful confession sparked intense discussions across social media platforms about the ethics of AI technology and its potential for misuse.

The Broader Context

This incident brought to the forefront a growing problem in the digital age: the rise of “deepfake” technology.

Deepfakes use sophisticated AI algorithms to create or manipulate digital content, often superimposing one person’s likeness onto another’s body without consent.

Hany Farid, a computer science professor at the University of California, Berkeley, highlighted the growing concern over deepfakes, noting that the issue is “undoubtedly worsening” as the creation of realistic and sophisticated videos has become more accessible through automated tools and websites.

Research by livestreaming analyst Genevieve Oh reveals a significant surge in the number of deepfake videos online, with the count nearly doubling each year since 2018. In 2018, a well-known deepfake streaming site hosted just 1,897 videos, but by 2022, this figure had soared to over 13,000, attracting more than 16 million monthly views.
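To put the two quoted figures in perspective, the average yearly growth they imply can be worked out directly. The short sketch below uses only the numbers cited above and treats 13,000 as a lower bound, since the text says "over 13,000"; the variable names are illustrative.

```python
# Figures quoted in the text; 13,000 is a lower bound ("over 13,000").
videos_2018 = 1_897
videos_2022 = 13_000
years = 2022 - 2018

# Overall growth factor between the two endpoints.
total_growth = videos_2022 / videos_2018

# Average (compound) growth factor per year implied by the endpoints.
annual_growth = total_growth ** (1 / years)

print(f"Total growth 2018-2022: {total_growth:.2f}x")
print(f"Implied average annual growth: {annual_growth:.2f}x")
```

Taken on their own, these two endpoints imply the site's catalogue grew by roughly 1.6 times per year on average. That is a floor, because the 2022 figure is only given as "over 13,000".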

What Is a Deepfake?

Deepfakes are AI-generated media—videos, images, or audio—that convincingly imitate the likeness and voice of real individuals.

This technology often relies on Generative Adversarial Networks (GANs), a machine learning framework in which two neural networks compete against each other.

One network generates content (the generator), while the other evaluates its authenticity (the discriminator).

The generator aims to create outputs indistinguishable from real data, while the discriminator attempts to identify which inputs are fake.

This iterative process results in increasingly realistic representations of people.
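The competition between generator and discriminator is usually written as a minimax objective. The formulation below is the standard one from the original GAN paper (Goodfellow et al., 2014), not something specific to any particular deepfake tool. The symbols are introduced here for illustration: $G$ is the generator, $D$ is the discriminator, $x$ is a real sample drawn from the data distribution $p_{\text{data}}$, and $z$ is random noise drawn from a prior $p_z$.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to maximize $V$ by assigning high probability to real samples and low probability to generated ones, while the generator tries to minimize it by producing samples $G(z)$ that the discriminator scores as real.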

How were Pokimane Deepfakes created?

Creating Pokimane deepfake content is no longer difficult today. There are many open-source models and free websites that offer face-swapping features for images and videos to create such deepfakes. However, not all face-swapping websites provide deepfake pornography services.

*Disclaimer: The websites used in the following demo are only for showcasing face-swapping functionality and do not support deepfake porn services.

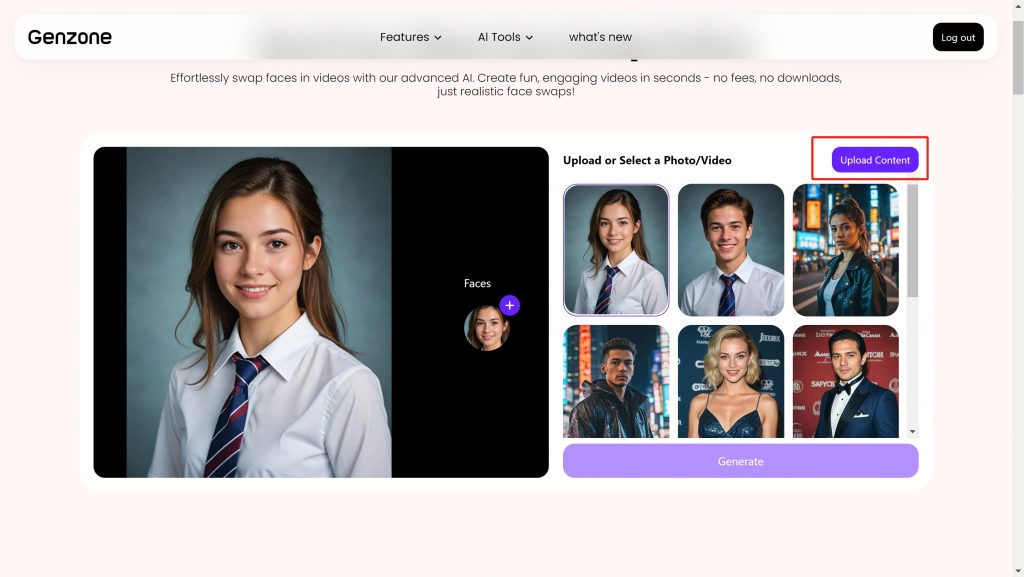

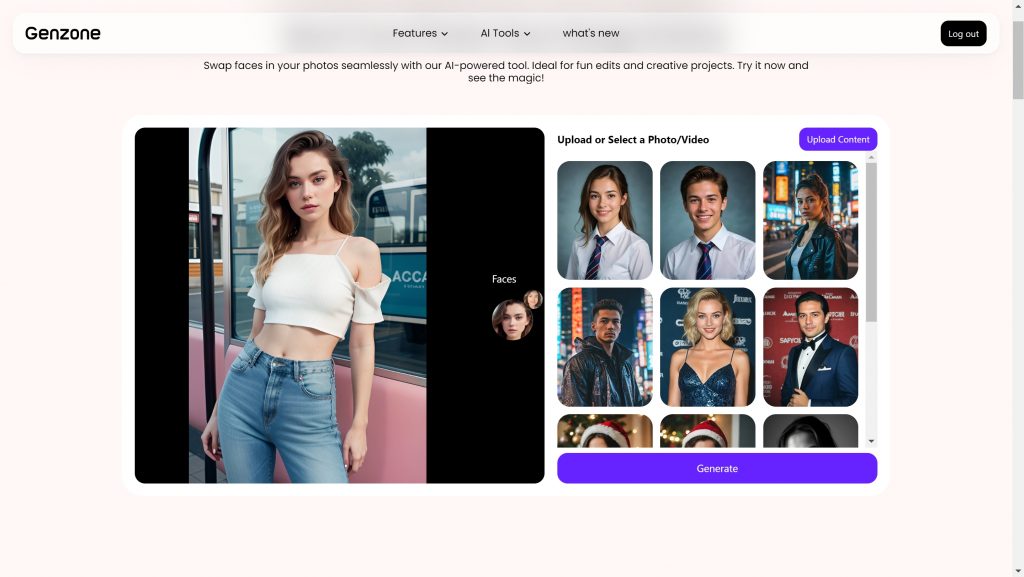

(1) Visit genzone.ai/video-face-swap sign up or sign in, it will provide you with 2 free trials.

(2) Click ‘upload content’ to upload the target video/photos

(3) Click ‘Faces’ on the right and ‘Add Face’ to upload the portrait of Pokimane’s face.

(4) Click ‘Generate’ and wait for a few seconds you’ll have the result. You can download the video/photos directly.

Responses of Pokimane and Other Streamers to the Deepfakes

The deepfake incident involving Pokimane and other female streamers has elicited strong reactions from the affected individuals and the broader streaming community.

Pokimane’s Response

Pokimane, a leading figure in the streaming world, has not issued a lengthy public statement but did express her feelings through social media.

She emphasized the importance of consent, urging individuals to “stop sexualizing people without their consent.”

This succinct yet powerful message resonates with the broader outrage against the exploitation of women in digital spaces and underscores the emotional distress caused by such violations.

QTCinderella’s Reaction

QTCinderella, who was also targeted in this incident, expressed her devastation over the situation.

She described her friendship with Atrioc as “irreparable,” indicating that the breach of trust was profound.

During discussions on various platforms, she articulated her feelings of violation and trauma, stating that Atrioc’s actions had created a “wildfire” of deepfake pornography.

Her emotional response highlighted the psychological toll such incidents can have on victims, as she shared her struggle with body dysmorphia since discovering the existence of these deepfakes.

Maya Higa’s Statement

Maya Higa also spoke out against the incident, drawing parallels between her past experiences and the current situation.

She recounted a personal trauma from 2018, stating that she felt similarly exploited by the creation of deepfake content featuring her likeness.

Higa emphasized that her image was used for others’ sexual gratification without her consent, reinforcing that this issue transcends mere digital manipulation: it is a violation of personal autonomy and dignity.

Why the Discussion of the Pokimane Deepfake Is Missing the Point

Initial Reactions and Apologies

On January 30, Ewing conducted a brief 14-minute stream alongside his wife, offering an emotional apology that quickly went viral.

Two days later, he followed up with a written statement on Twitter.

However, this approach drew criticism from those affected, with one victim noting, “He released that apology before he apologized to some of the women involved. That’s not OK.”

The public response revealed a troubling divide in the online community.

While some viewers were quick to accept the apology, viewing it as a mistake by “an otherwise good guy,” others pointed out that the focus on his emotional response overshadowed the actual harm inflicted on the victims.

Minimizing Real Harm

Across social media platforms, particularly on Reddit and Twitter, a concerning pattern emerged: numerous commenters attempted to downplay the severity of the situation, comparing deepfakes to simple photo manipulation.

These dismissive attitudes highlighted a fundamental misunderstanding of the technology’s impact.

Understanding the Real Impact

Dr. Cailin O’Connor, author of The Misinformation Age and professor at the University of California, Irvine, offers crucial insight into why deepfakes can be so damaging: “Whether or not they’re fake, the impression still lasts.”

She explains that while deepfakes might not qualify as traditional misinformation, they fundamentally alter how people perceive and relate to the victims.

“It’s not exactly misinformation because it doesn’t give you a false belief, but it changes someone’s attitudinal response and the way they feel about them,” O’Connor elaborates.

The harm lies in the non-consensual objectification – turning individuals into unwilling sexual objects in situations they may find degrading or shameful.

The False “It’s Not Real” Argument

The notion that deepfakes can’t be harmful because they’re not “real” reveals a dangerous misunderstanding of digital harassment.

When victims clearly express their distress and trauma, dismissing their experiences as inconsequential only compounds the harm.

This disconnect between public perception and victim impact underscores the need for better education about digital ethics and consent in the age of AI.

Problems with Current Laws and Regulations Regarding Deepfakes

The legal landscape surrounding deepfakes is fraught with challenges, as existing laws struggle to keep pace with the rapid development of this technology.

A Regulatory Wild West

In the aftermath of the Pokimane incident, many victims found themselves facing a troubling reality: the law offered little protection against this new form of digital violation.

Despite the clear harm caused by non-consensual deepfakes, the legal system remains woefully unprepared to address these technological threats.

The Federal Vacuum

Perhaps the most glaring issue is the complete absence of comprehensive federal legislation addressing deepfakes in the United States.

While lawmakers have proposed various solutions, including the promising No AI FRAUD Act and the DEEPFAKES Accountability Act, none have successfully made it through the legislative process.

This leaves victims navigating a patchwork of inconsistent state regulations, often finding themselves in legal limbo.

Two States

The stark contrast between different state approaches highlights the current system’s inadequacy. California, for instance, has taken proactive steps by implementing laws that specifically target non-consensual deepfake pornography, allowing victims to pursue damages.

However, cross the state line, and victims might find themselves without any legal recourse. This geographic lottery of justice creates an unsustainable situation where protection depends entirely on where you live.

The Enforcement Puzzle

Even in jurisdictions with existing protections, law enforcement faces significant challenges:

(1) Perpetrators often hide behind layers of digital anonymity, making tracking and prosecution nearly impossible.

The borderless nature of the internet means that someone creating harmful content in one jurisdiction can easily target victims in another, exploiting gaps in legal frameworks.

(2) Law enforcement agencies frequently lack the sophisticated tools needed to detect and verify deepfakes effectively.

As one cybersecurity expert noted, “We’re fighting tomorrow’s technology with yesterday’s tools.”

The constant evolution of AI technology creates a perpetual game of catch-up, where detection methods struggle to keep pace with increasingly sophisticated creation techniques.

(3) The human cost of these legal inadequacies became painfully clear during the Pokimane incident. Fellow content creator QTCinderella’s experience serves as a stark example: after consulting multiple lawyers, she discovered there was virtually no legal avenue to pursue justice against those who created and distributed harmful deepfake content of her.

“The lawyers all said the same thing,” she revealed in an emotional stream. “There’s nothing we can do.” This devastating reality highlights how current laws have failed to adapt to modern forms of harassment and exploitation.

Final thoughts: What can we do to prevent deepfake harm in the future?

As the prevalence of deepfakes continues to rise, it is essential to develop comprehensive strategies to mitigate their harmful effects. Here are several proactive measures that can be implemented across various sectors:

Training Programs: Educate people to spot deepfakes and understand their risks through regular workshops and simulations.

Public Awareness Campaigns: Launch initiatives to inform the public about deepfakes, their misuse, and detection methods.

Detection Tools: Invest in advanced technologies to identify deepfakes by analyzing inconsistencies in audio and video.

Content Tracking: Use systems like blockchain to track the origin and changes in digital content for better transparency.

Legislation: Create laws to regulate the creation and distribution of harmful deepfakes, including penalties for misuse.

International Collaboration: Work globally to develop unified standards and regulations for deepfakes.

Industry Standards: Technology companies should adopt ethical guidelines and require consent for using individuals’ likenesses in deepfakes.

Multi-Factor Authentication (MFA): Use MFA to add security against deepfake impersonation attempts.

Crisis Communication Plans: Develop protocols to respond to deepfake incidents, addressing reputational damage and ensuring timely communication.
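The content-tracking idea in the list above can be illustrated with a small hash-chained log. This is only a minimal sketch of the general principle behind blockchain-style provenance, not a real blockchain or any specific product: each record stores a fingerprint of the content and a link to the previous record, so later tampering with the history becomes detectable. The function names (`fingerprint`, `append_record`, `verify_log`) and the record fields are hypothetical and chosen for this example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def append_record(log: list, content: bytes, note: str) -> dict:
    """Append a record that links to the previous record's hash."""
    prev_hash = log[-1]["record_hash"] if log else GENESIS
    body = {
        "content_hash": fingerprint(content),
        "note": note,
        "prev_hash": prev_hash,
    }
    # Hash the record body in a stable key order so verification is repeatable.
    record_hash = fingerprint(json.dumps(body, sort_keys=True).encode("utf-8"))
    record = {**body, "record_hash": record_hash}
    log.append(record)
    return record


def verify_log(log: list) -> bool:
    """Check that every record is intact and correctly chained."""
    prev_hash = GENESIS
    for record in log:
        body = {k: record[k] for k in ("content_hash", "note", "prev_hash")}
        expected = fingerprint(json.dumps(body, sort_keys=True).encode("utf-8"))
        if record["prev_hash"] != prev_hash or record["record_hash"] != expected:
            return False
        prev_hash = record["record_hash"]
    return True


log = []
append_record(log, b"original video bytes", "published by creator")
append_record(log, b"edited video bytes", "cropped for social media")
print(verify_log(log))   # chain is intact

log[0]["note"] = "tampered"
print(verify_log(log))   # the altered record no longer matches its hash
```

A log like this only shows that a recorded history has not been altered. It does not by itself prove that the original content was authentic, which is why provenance tracking is listed alongside detection tools and legislation rather than as a replacement for them.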
